Is it "AI" Bias or is it just "Bias" and Bad Social Science?

The Veterans Affairs Department's REACH VET Suicide Prevention "AI" tool

Good morning everyone. I promised on LinkedIn yesterday that I would write a blog post about the Veterans Affairs (VA) Department’s use of the REACH VET predictive model, which is used to predict the likelihood of suicide in veterans receiving VA care. REACH VET (hereafter ‘the tool’) identifies those most at risk of attempting suicide and alerts providers so that they may intervene before the veteran attempts to harm or kill themselves. Yesterday, Military Times reported that the tool does not take into consideration a veteran’s history of Military Sexual Trauma (MST), and that it skews heavily towards flagging white men for intervention. Let’s look at what is going on here.

Here are my up-front caveats. First, I think such a tool is a wonderful idea and an opportunity to help Vets. The VA is often swamped and underfunded, and tools like this can help to alert them to problems they may not see, especially when there is a time-sensitivity to intervention. So, I’m not here to say “get rid of REACH VET”. Second, while this post is going to cover the topic of Military Sexual Trauma (MST) as a missing variable in the predictive model, the problem with this model goes far beyond just including MST as a variable. The social science around sexual assault and harassment is such that we are always working in probabilities and not exact numbers, because there is no complete dataset. People don’t report the majority of the time. Moreover, the military as an organization and a culture is extremely different from other settings (say, for example, the college campuses that feature in many reports on sexual assault). While it is certainly true that the median age of enlisted DoD personnel is about 26 years old, and there are a fair number of younger 18-24 year olds, service members face vastly different constraints than the garden variety co-ed. First amongst them - they can’t just leave (without serious punishments). Additionally, the demographics of the military are themselves already skewed. The numbers highlight this pretty clearly. Finally, I’m going to tackle this issue from a social science methodology approach. Because this topic is so ethically charged, I’m going to attempt to look at it from an angle that allows me greater emotional distance.

However, I will note up front - I was the ghost author for the Defense Innovation Board’s AI Ethics Principle project for the DoD. Those 5 principles were adopted by the then SecDef for the DoD in February of 2020. The second principle listed is “Equitable”. That principle states that the DoD will take “deliberate steps to minimize unintended bias in AI capabilities.” I’m often asked what the Equitable principle really does for DoD… and well, here is a very good case. (Yes, the VA is another Department and not DoD, but we can see the links, yes?) When this principle was under discussion both internally and externally to DoD, the term “fairness” was rejected for a number of reasons. But the content remains - this is an issue where the use of a predictive AI model to identify veterans and provide those individuals with resources is biased… and I would hope that it is *unintentionally* biased. That is to say, this was just some rather myopic thinking and bad social science and not an outright attempt to skew/bias the results (which frankly, are merely in lock step with the data available to the model). With the White House’s demands for fairness and equality when it comes to AI, as well as the earlier DoD AI Ethics Principles, it seems to me that the VA has some work to do.

OK. So from what I can gather, here is the state of play with REACH VET: they wanted to be able to identify those Vets most at risk of suicide. They initially started using good-old-fashioned statistics and econometric models, but they figured that machine learning would give them some extra oomph and so transitioned to that approach. (I’m still uncertain why, given the state of their variables… but let’s not dwell.) Here is their stated strategy:

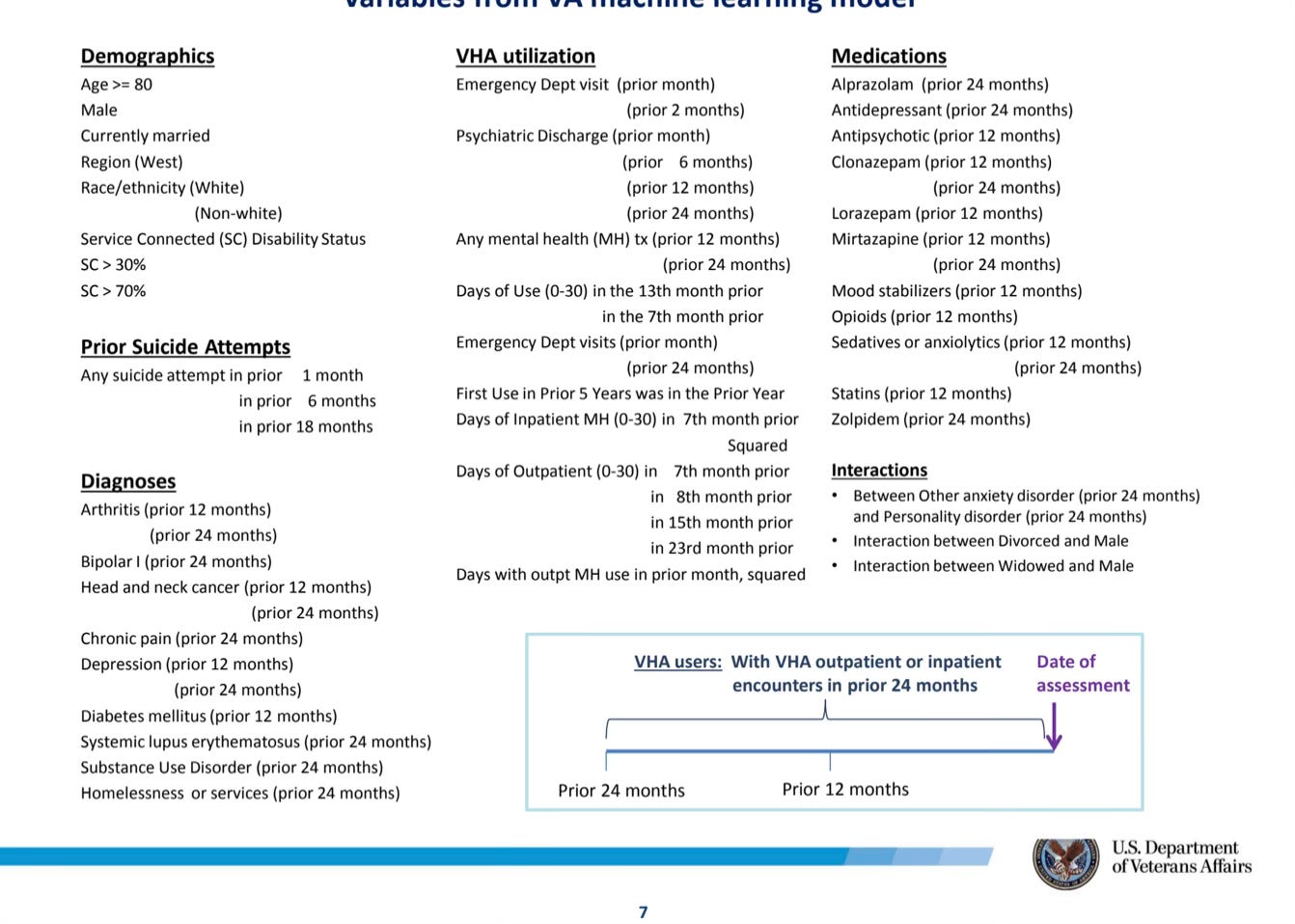

The initial studies of the program utilized a population distribution that looks like this: 93% male, 7% female. So, men are pretty heavily over-represented to start. But here is a quick view of what the variables look like (as disclosed):

Notice, dear reader: there are two variables for race, RWhite and RNWhite. This is a deliberate choice by the researchers. The DoD and the VA have finer grained detail on the demographics of their people. For example, in most* cases we see race reported along these lines: White, Black or African American, Asian, Native American or Alaska Native, Hawaiian or Pacific Islander, Multi-Racial, and divisions between Hispanic or Latino and Non-Hispanic or Latino.

So! The first methodological decision is to collapse all of the other racial categories into White and NonWhite. (I’m guessing the R is for Respondent). What is the racial composition of the (almost) current forces for the DoD? Well…. Of the roughly 2.5 million active duty military, the total force is about 70% White. Of the men… 73% are White. Active duty officers are about 78.5% white. If you include White and Non-Hispanic or Latino that figure rises to about 91.6%. Now, when it comes to the VA, we have a different set of concerns about population. The Veteran Health Administration has about 9 million Vets that it serves. That is about 1/2 of all of the Vets living in the US. The predominant racial category for Vets is White (about 78%).

Ok, so the Vet population sort of tracks with the DoD population (unsurprising… you have to be active duty in DoD to become an eligible Vet). But, the REACH VET tool skews this WAAY further, with the distribution of their population being 93% male to 7% female. Now, one could object here and say that well, that is what the data distribution looks like for the subset of Vets they were looking at — i.e., those Vets that were identified as high risk for suicide. OK. Sure. But let’s put a pin in the issue of how one even BECOMES identified to begin with to be considered for that pool. We’ll get back to that in a minute. Right now, we have the “tool” collapsing all race data into White/NonWhite. There is no methodological reason to do this. You can get finer grained detail with the data if you have it. This just seems to me to be rather lazy coding to get a binary variable to do logit or probit likelihood estimations. Unfortunately, this way of thinking does little to actually help anyone.
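To make the White/NonWhite point concrete, here is a minimal Python sketch of what the collapse throws away. Everything in it is an assumption for illustration: the six records are made up, and the helper names `collapse_to_binary` and `one_hot` are hypothetical. It is not the VA's code or data, only a picture of the coding choice.

```python
# Hypothetical records for illustration only; not VA or DoD data.
records = [
    "White",
    "Black or African American",
    "Asian",
    "White",
    "Native American or Alaska Native",
    "Multi-Racial",
]

def collapse_to_binary(race):
    """Binary coding in the style of the RWhite / RNWhite variables."""
    is_white = race == "White"
    return {"RWhite": int(is_white), "RNWhite": int(not is_white)}

def one_hot(race, categories):
    """One indicator per category, so each group could get its own coefficient."""
    return {f"R_{c}": int(race == c) for c in categories}

categories = sorted(set(records))
binary_rows = [collapse_to_binary(r) for r in records]
one_hot_rows = [one_hot(r, categories) for r in records]

# Count how many distinct groups each coding can still tell apart.
distinct_binary = len({tuple(row.values()) for row in binary_rows})
distinct_one_hot = len({tuple(row.values()) for row in one_hot_rows})

print("groups distinguishable with binary coding:", distinct_binary)
print("groups distinguishable with one-hot coding:", distinct_one_hot)
```

In an actual regression you would normally drop one indicator as the reference category, but the point stands: with the binary coding, every non-White group is forced to share a single coefficient.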

Here is another rather odd discrepancy when looking at the variable list/table: Look for the variable SEX. Did you see it? Let me help you.

Notice? There is only 1 variable. SEXM. Where is the SEXF? They have it if they are including women in the sample. But they don’t report the coefficient. Why? Is it because it was so small that it wasn’t statistically significant? I still want to see it. So here we have another methodological choice.

OK, so here is what you will see if you look at the REACH VET tool:

Now, clearly the risk of suicide is influenced by a myriad of factors. And, Vets are a distinct population within the larger set of people at risk. Ok. But I want to know why the researchers at the VA and the National Institute of Mental Health (NIMH) decided that they were not going to look ANY FURTHER at other variables than the 61 they did… particularly Military Sexual Trauma.

This seems to me to be evidence NOT of the AI tool being biased, but of the methodological preferences of the human researchers building the tool.

We clearly know that victims of sexual assault and violence are at a higher risk for suicidal ideation and attempts. In fact, some statistics suggest that around 33% of victims contemplate suicide and 13% attempt it. Couple this with data from the military on sexual trauma and you start to see some worrying trends.

We know that sexual assault, contact, or harassment is underreported, particularly in the military. So, if we were to say that the reporting rate is about 25%, meaning that only 1 in 4 service members report, we have a pretty low reporting rate. If roughly 75% of MSTs go unreported, then you aren’t getting the data on what is happening, when, why, by whom, etc. Moreover, we know that MST can affect both men and women, with women suffering at about twice the rate of men. If we take the DoD’s reporting on sexual assault for FY23, we have 8,515 reports filed. But, if we know that that is only 1/4 of the actual assaults, then the true number is more like 34,000 sexual assaults (to say nothing of the other forms of MST).
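The back-of-the-envelope arithmetic here is simple enough to write down. A minimal sketch, assuming a hypothetical helper `estimated_incidents` and treating the 25% reporting rate as exactly what it is: an assumption, not a measured value. The alternative rates in the loop are only there to show how sensitive the estimate is to that assumption.

```python
def estimated_incidents(reports, reporting_rate):
    """Scale filed reports up to an estimated true incident count."""
    if not 0 < reporting_rate <= 1:
        raise ValueError("reporting_rate must be in (0, 1]")
    return reports / reporting_rate

reports_fy23 = 8515          # filed reports cited in the text
assumed_rate = 0.25          # assumption: only 1 in 4 incidents is reported

total = estimated_incidents(reports_fy23, assumed_rate)
unreported = total - reports_fy23
print(f"estimated incidents: {total:,.0f}, never reported: {unreported:,.0f}")

# Sensitivity check: the estimate moves a lot with the assumed rate.
for rate in (0.20, 0.25, 0.30):
    print(f"rate {rate:.0%}: about {estimated_incidents(reports_fy23, rate):,.0f}")
```

Whatever rate you pick, the model only ever sees the reported slice, and that is the part that matters for a tool trained on recorded data.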

The subject pool for REACH VET only included 12,049 women in its analyses, or roughly 7%. Of the 9 million vets receiving care, about 800,000 are women, or roughly 9%. Women make up about 17.5% of the active duty force. So, it is neither here nor there on whether the population sample for the tool is representative and OK.

Here is the problem though. Again, both men and women experience MST. And, if we believe that we need to report INCIDENTS and not merely the demographics, then we have a big problem. Incidents for one year could be around 34,000. We have around 425,000 active duty women, and they experience MST at about twice the rate of men. The math does seem to matter here.

Also, MST is a major contributor to mental health decline and the likelihood of suicidal ideation and attempts. So, we know that MST is a serious factor for those people who have experienced it. But, it appears that because the data is so highly skewed to be white and male, the prevalence of that information will not show as statistically significant (if that is what the researchers thought/found when they decided to EXCLUDE IT).

But wait… that isn’t right. Because the model isn’t even ACCOUNTING FOR IT. The model is not going to identify individuals who are at a high risk for suicide if the variables are not included in the model! I can’t predict what affects someone’s likelihood of committing suicide (a binary dependent variable) if the independent variables are limited to variables that do not get at the underlying reason for wanting to commit suicide in the first place.

The model looks at whether someone has tried to commit suicide (and they are honestly reporting) in the last 1, 6 or 18 months. If an attempt falls outside of those time periods, then the model never learns whether you have *ever* in your life attempted it.

What is more, as more women are entering into the VA’s care, there is more than likely going to be a different set of demographic variables at play. There will be not only more women, but more women of color (or Non-White for REACH VET). Additionally, sexual trauma can show up at any time, and it can be triggered by a wide swath of factors. Given that we have increases in MST reporting within DoD, that means we know it is happening… but the data on the reporting is rather bleak from a trauma perspective.

Here is some more data: In 2012 of the reports made, 68% of those reports resulted in criminal charges. By 2022, this decreased to 37%. We see the opposite trend in nonjudicial punishments. In 2012, we see an 18% rate of nonjudicial punishments for reports of MST. By 2022, we have that increase to 35%. The reason offered? Victims of MST do not want to be retraumatized by a trial. Which points to all those other horrible factors that revolve around MST in military culture and chains of command - such as fear of retribution. But even for those victims who report… the very structures for support are not that great. Just look at the satisfaction ratings for the support networks — 58% of victims are satisfied with victim advocates, 51% are satisfied with legal counsel, and 59% are satisfied with their response coordinators. Flip that on its head. We are basically saying that 1/2 of people are satisfied and 1/2 are not. Those are the odds of flipping a coin. So, a victim musters the courage to report, and then he or she can flip a coin as to whether or not the processes are actually going to help. If they don’t, then they leave or are discharged. They may then end up in the VA for MST related health issues.

I know I’m offering a lot of statistics here, but here is the main issue I have - if you are going to offer a tool that provides you with insights into how to provide an ADDED BENEFIT to a class of vulnerable people, then you better make sure your tool actually identifies everyone equitably. The way this tool is constructed is made by a series of methodological choices, and those choices are informed by not merely the availability of the data, but by cultural norms and biases. If the tool then represents these biases, and you give the tool data that reflects the structural biases, then you are going to get biased results. This is no giant secret and it does not require AI to do it.

Sure, one could object and claim that even if we included MST in the data, it is not statistically significant. But the VA does have this data! It asks every individual who comes through its door for care whether they have been a victim of MST. It might not be perfect, but it is there. And, what really chaps my hide is that the VA already KNOWS that it needs to have specialized approaches for women veterans! They published a paper on it!

So why, pray tell, is this not included in REACH VET? Since now we ARE talking about AI!! You can have a machine learning algorithm that includes a vast amount of data, even unstructured data, to look for more patterns. What is more, if you know your data is skewed (i.e. biased), you can actually weight elements more heavily to make up for this skew. The entire thing is maddening to me. The right hand has no idea what the left is doing, or no one is looking or seems to care.
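For readers wondering what "weighting to make up for skew" can look like, here is a minimal sketch of one common heuristic: inverse-frequency weights, using the same n / (k × count) formula that scikit-learn documents for its "balanced" class weights, applied here to group membership. The function name `balanced_weights` and the 93/7 toy sample are assumptions for illustration; nothing here describes what REACH VET actually does.

```python
from collections import Counter

def balanced_weights(labels):
    """Inverse-frequency weight per group: n / (k * count_of_group)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {group: n / (k * count) for group, count in counts.items()}

# Toy sample mirroring the 93% male / 7% female split discussed in the text.
labels = ["M"] * 93 + ["F"] * 7
weights = balanced_weights(labels)

# After weighting, each group contributes the same total weight to training.
total_m = weights["M"] * labels.count("M")
total_f = weights["F"] * labels.count("F")
print(f"weight per male record:   {weights['M']:.3f}")
print(f"weight per female record: {weights['F']:.3f}")
print(f"total weight by group: M={total_m:.1f}, F={total_f:.1f}")
```

Weights like these are usually passed to a model as per-record sample weights. They can offset a skewed sample, but they cannot stand in for a variable, like MST, that was never put in the model at all.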

I am troubled by some of the methodological choices made by the REACH VET modelers. I’m more troubled that no one in all of the public reviews of the model (GAO, NIMH, academic peer reviews) seemed to notice some glaring issues with the variable constructions and the distribution of the data. And as always, I’m worried at the extent to which people buy cars by how they look without ever looking under the hood.

Here is to hoping….